Welcome to my website! I am a technologist, research scientist, and software engineer with 10 years of experience in Artificial Intelligence, Machine Learning, and Cognitive Neuroscience. I specialize in foundation models toward AGI, Bayesian inference, probabilistic programming, and their industry applications.

I am currently an Applied Scientist at AWS in Seattle.

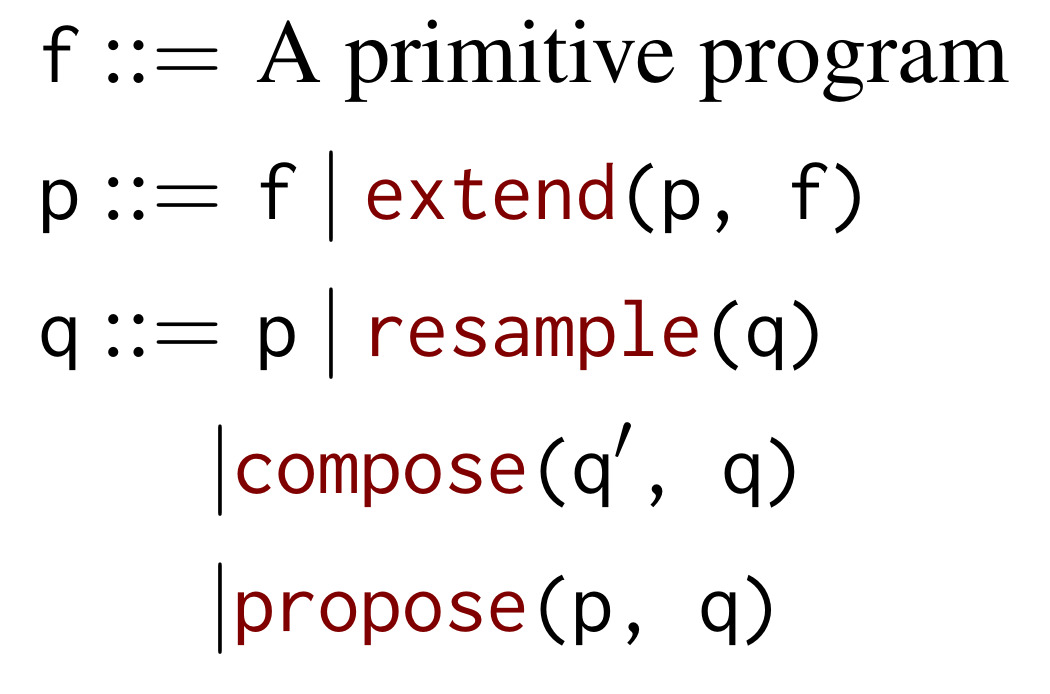

I received my Ph.D. in Computer Science from Northeastern University, where I was advised by Prof. Jan-Willem van de Meent. Before that, I earned an M.Sc. in Computer Science from the University of Virginia and an M.Sc. in Applied Mathematics from the University of Washington.

News

Oct 2024: Our short paper on conditional mixture networks was accepted at the NeurIPS Workshop on Bayesian Decision-making and Uncertainty!

Oct 2024: I am excited to be joining AWS as an Applied Scientist!

Sep 2024: Our paper on predictive coding was accepted at NeurIPS 2024!

Selected Publications

E-Mail

GitHub

Google Scholar

Twitter

Curriculum Vitae